Inner JOIN-xiety and AI Reflections

I feel compelled to write about AI and its role in software development. AI has become a HUGE topic over the past few years. I can’t think of a technology that has gained so much attention so fast. Many organizations report that AI is writing a large percentage of their code, or that they can create software with a tenth as many coders as before. Honestly, in my little world and limited view, I’m not seeing it. I do agree, though, that what we have today is quite amazing.

Coding with AI

If you cannot code without AI, you cannot code.

I find AI code generators extremely helpful… for some things. For me, it is really great for complicated SQL. But I’m not very good at SQL. I never learned it well and fortunately, non-relational databases gained popularity before I had to force myself to git gud at it. Similarly, when I use generative AI to create a picture for me, it is because I am not an artist. It is the same with spelling. I rely heavily on spell correction. If I continue to use these tools, I will likely never be an artist or be able to spell much beyond my own name or get over my INNER JOIN-xiety.

I find the experiment by Eric Klopfer to be poignant. Even if you can coerce AI to create something that works for you, it won’t make you a programmer. You won’t be able to effectively maintain, document, debug, modify, or even understand code. It is wishful thinking to believe that an LLM will do those things for you.

In college, I was a teaching assistant for a computer graphics programming class. After a while, I realized that there were some students who were essentially getting me to write their code. It was clear they had little to no programming skills and definitely didn’t understand the material in the class. Unfortunately, they weren’t any better off after I had “helped” them by writing their code for them. They used up a lot of my time that I could have spent helping others, and I’m sorry it took me so long to figure out what was going on. Unlike me, LLMs don’t care about being used. It could be argued that LLM companies are using us.

AI’s Leaky Bucket

One of my favorite stories is Asimov’s Foundation series. One of the concepts in the books is that technology and machines can become so long-lived and self-maintaining that people forget how they work. Once the machines begin to break down, no one knows how to fix them, and no one understands the scientific principles well enough to innovate and create new technologies. In one part of the story, a man with a tiny personal nuclear shield visits a planet where no such devices exist because the people do not have sufficient technical knowledge. However, this planet has huge nuclear generators that power cities and planetary shields. Such large reactors and shields are incomprehensible to the man with the tiny nuclear reactor. His people do not have the technical knowledge to produce such large-scale reactors. The irony is that both technologies came from a shared civilization, centuries in the past, that did understand the technology well enough to produce either reactor. The modern civilizations, by neglecting to educate themselves, became limited by a technology that mostly took care of itself. The knowledge of the old civilization leaked away. Maybe, one day, AI can become good enough and generalized enough to innovate and communicate well enough to overcome this problem, but we’re not there; not even close.

Vibe Coding is Vape Coding

I’ve had AI autocomplete whole functions for me just by typing in a partial function declaration. It is a little spooky when it does that. I think this is what companies mean when they claim that AI is writing some large percentage of their code. It is a bit of a fudged statistic. At best it is a good autocomplete tool. This autocomplete hasn’t saved me much, if any, time. I can type pretty fast (typing proficiency is an under-appreciated programmer skill) and I have to spend as much time reading the generated code as I would writing it myself. In any case, the value of a good programmer isn’t in writing code; it is in being a domain expert. It is about being able to communicate what the code does to others, intuiting what is happening when things go wrong, and knowing how to apply existing solutions to new problems.

Token limits and a lack of reasoning capabilities will prevent LLMs from being able to take the place of programmers. Arguably, LLMs can improve or enhance developer efficiency, but even leaps in LLM capability will not allow them to replace developers.

A Lossy Compression Problem

Some argue that AI can replace programmers, or that one day it will be able to. However, the token limits of LLMs make this impossible beyond very simple code. It is a lossy data compression problem.

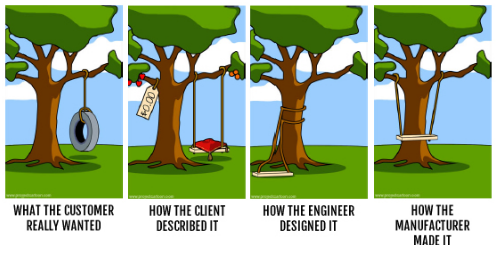

English is not a programming language for a reason. English (or any other “human” language) is not machine language because it has ambiguity and subjectivity; it is lossy (or at least the way we use it is lossy). Any English statement has entropy. Generative AI hallucinates, partly, because the language we use to communicate with it is ambiguous and AI has no choice but to fill in the gaps. I am not claiming generative AI wouldn’t hallucinate even if we used an unambiguous language, but I am claiming that if we used an unambiguous language we wouldn’t need generative AI. It is impossible to go from an ambiguous language to an unambiguous language without making stuff up. It is the classic Engineering Tree Swing problem.
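To make the ambiguity point concrete, here is a minimal Python sketch. The requirement text and the list of names are hypothetical, made up purely for illustration. It shows one short English sentence mapping to three different programs, each a defensible reading, so whoever (or whatever) writes the code has to pick one.

```python
# Hypothetical English requirement: "Sort the users by name."
# The user list is made up for illustration only.
users = ["bob", "Alice", "carol", "Dave"]

# Reading 1: plain code-point order, so uppercase sorts before lowercase.
by_code_point = sorted(users)

# Reading 2: case-insensitive order, which is what most people would expect.
ignoring_case = sorted(users, key=str.lower)

# Reading 3: descending order, because the sentence never said "ascending".
descending = sorted(users, key=str.lower, reverse=True)

print(by_code_point)   # ['Alice', 'Dave', 'bob', 'carol']
print(ignoring_case)   # ['Alice', 'bob', 'carol', 'Dave']
print(descending)      # ['Dave', 'carol', 'bob', 'Alice']
```

All three programs satisfy the sentence as written. Choosing between them requires information that is not in the sentence, and that missing information is exactly the gap that either a conversation with the product team or a guess has to fill.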

Fortunately, English can be filtered and distilled into unambiguous languages. That is what programmers do. A programmer’s job is less about writing code and more about removing ambiguity from human language and translating it into machine language. They disambiguate requirements documents by working with product teams or systems engineers. The number of spoken and written words needed to do this is in the billions for a small project and easily in the hundreds of billions or trillions for larger projects. Programmers take billions or trillions of human language words and produce millions or billions of machine tokens. LLMs cannot handle input of this size, and if they could, it would be very expensive.

But What If

If we assume that AI reaches the general or superintelligent point where it can economically produce correct, maintainable, and efficient code from requirements documents and back-and-forth conversations with product managers or systems engineers, then the question isn’t whether it will replace developers. The question is what we should do with such an awesome tool. Worrying about job loss would be looking a gift horse in the mouth.

Tangents

A History of Unfulfilled Promises

I don’t want to go into the history too much, but the perception of what AI can do has gone through a lot of ups and downs. In the 1950s and 1960s, the optimistic years of AI, people thought there would be general thinking machines within 10 years. We’re over 50 years behind that estimate. The letdown was so big that it took decades, and rebranding AI with terms like Machine Learning and Soft Computing, to get attention back on it (I think those are much better terms for the technology).

Do We Even Know What We’re Trying to Create

I don’t think we have a good enough definition of “intelligence” to claim we can create “artificial” intelligence. If we can’t define intelligence, how can we claim to create it? The Turing Test doesn’t tell us whether something is intelligent; it just gives us a way to say that something appears intelligent to some people. Many humans can’t pass the Turing Test. The book “Blindsight” brings up the notion that we might not even recognize intelligence if it were right in front of us.

General/Super Artificial Intelligence Tangent

In science fiction there have always been competing theories of benevolent and malevolent thinking machines, from The Terminator to The Transformers. My favorite contrasting stories are Asimov’s “Robots” and Herbert’s “Thinking Machines” (I’m referring to the book versions, not the movie/show versions). The Robots are ruled by a sense of humility and ethics. The Thinking Machines are ruled by a sense of superiority and efficiency. Ironically, both AI incarnations end up shaping a future in which human civilization lives without AI.

Accurate AI Predictions

So far, of all the SciFi AI stories, IMHO, the one that seems to be the most prophetic is The Matrix. We are literally creating large amounts of energy to power AI. The only difference between The Matrix and real life is that in real life, AI hasn’t had to physically capture us to do it.